In the model config file we can see something like this:

$t_w$ and $t_h$ are expressed this way because of how boxes are parameterized in the YOLO V2 paper:

We also need to output the confidence and the class label, which are easy to determine. For more details, see `buildTargets` in `utils.py`.

Now that the predicted values and the target values have the same format, we can calculate the loss. As we discussed earlier, it has three parts:

1) Bounding box coordinate error and dimension error, represented using mean squared error.
2) Objectness error, i.e. the error in the confidence score of whether there is an object or not. When there is an object, we want the score to equal the IOU; when there is no object, we want it to be zero. This is also a mean squared error.
3) Classification error, which uses cross-entropy loss.

In the code, the calculation of the losses is written as:

```python
...
... mse_loss(pred_conf, tconf)
loss_cls = (1 / batch_size) * self. ...
... argmax(tcls, 1))
loss = loss_x + loss_y + loss_w + loss_h + loss_conf + loss_cls
```

Detect Objects

After the model has been trained for enough epochs, we can use it to detect objects. One thing that needs special attention here is non-maximum suppression.
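The target encoding behind the $t_w$ and $t_h$ sentence can be sketched as follows. In the YOLO V2 paper, a box predicted in the cell with offset $(c_x, c_y)$ using an anchor (prior) of size $(p_w, p_h)$ is decoded as $b_x = \sigma(t_x) + c_x$, $b_y = \sigma(t_y) + c_y$, $b_w = p_w e^{t_w}$ and $b_h = p_h e^{t_h}$. Inverting the last two for a ground-truth box of size $(g_w, g_h)$ gives $t_w = \log(g_w / p_w)$ and $t_h = \log(g_h / p_h)$. `encode_box` and `decode_wh` are hypothetical helpers written for this illustration, not the repository's `buildTargets`. They assume every coordinate is already in grid-cell units and that the centre's offset inside its cell serves as the target for the sigmoid-activated x/y outputs.

```python
import math


def encode_box(gx, gy, gw, gh, anchor_w, anchor_h):
    """Turn a ground-truth box (grid units, positive size) into regression targets.

    tx, ty: offset of the box centre inside its grid cell, in [0, 1); this is
            the target for sigma(t_x) and sigma(t_y) in the YOLO V2 parameterization.
    tw, th: log-ratio to the anchor, inverting b_w = p_w * exp(t_w).
    """
    tx = gx - math.floor(gx)
    ty = gy - math.floor(gy)
    tw = math.log(gw / anchor_w)
    th = math.log(gh / anchor_h)
    return tx, ty, tw, th


def decode_wh(tw, th, anchor_w, anchor_h):
    """YOLO V2 width/height decoding: b_w = p_w * e^{t_w}, b_h = p_h * e^{t_h}."""
    return anchor_w * math.exp(tw), anchor_h * math.exp(th)
```

Decoding the encoded $t_w, t_h$ with the same anchor recovers the original width and height. The log-ratio form therefore lets the network output any real number while the decoded size stays positive.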
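The three error terms listed above can be illustrated for a single prediction in plain Python. This is a minimal sketch, not the repository's PyTorch code. `yolo_style_loss` and `cross_entropy` are hypothetical names, and the sketch leaves out batching, masking and the `1 / batch_size` factor from the snippet. It also assumes the prediction is responsible for an object, because the class term is only meaningful in that case.

```python
import math


def cross_entropy(logits, target_index):
    """Softmax cross-entropy for one example, using the log-sum-exp trick for stability."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target_index]


def yolo_style_loss(pred_box, true_box, pred_conf, true_conf, class_logits, true_class):
    """Sum of the three error types for ONE prediction that is responsible for an object.

    pred_box / true_box: (x, y, w, h) in the same target encoding.
    true_conf: the IOU with the ground truth (it would be 0 where there is no object).
    class_logits: raw class scores; true_class: index of the correct class.
    """
    # 1) Coordinate and dimension errors: squared error per component.
    loss_x, loss_y, loss_w, loss_h = ((p - t) ** 2 for p, t in zip(pred_box, true_box))
    # 2) Objectness error: also a squared error against the IOU target.
    loss_conf = (pred_conf - true_conf) ** 2
    # 3) Classification error: cross-entropy against the correct class.
    loss_cls = cross_entropy(class_logits, true_class)
    return loss_x + loss_y + loss_w + loss_h + loss_conf + loss_cls
```

The final line has the same shape as the `loss = loss_x + ... + loss_cls` sum in the snippet. A real implementation computes each term over tensors of predictions and averages them.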
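The text does not spell out non-maximum suppression itself. Below is a sketch of the standard greedy variant. It assumes boxes in corner format `(x1, y1, x2, y2)` and uses an IOU threshold of 0.5, a value chosen here only for illustration. The algorithm keeps the highest-scoring box, discards every remaining box that overlaps it by more than the threshold, and repeats. The function names are illustrative.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def non_max_suppression(boxes, scores, iou_threshold=0.5):
    """Greedy NMS. Returns indices of the kept boxes, highest score first."""
    # Visit candidates from the most to the least confident.
    order = sorted(range(len(boxes)), key=lambda i: scores[i], reverse=True)
    keep = []
    while order:
        best = order.pop(0)
        keep.append(best)
        # Drop every remaining box that overlaps the kept one too much.
        order = [i for i in order if iou(boxes[best], boxes[i]) <= iou_threshold]
    return keep
```

Take boxes `(0, 0, 10, 10)`, `(1, 1, 11, 11)` and `(20, 20, 30, 30)` with scores 0.9, 0.8 and 0.7. The first two overlap with an IOU of about 0.68, so only indices `[0, 2]` survive. This sketch is class-agnostic; a common variant runs it separately for each predicted class.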

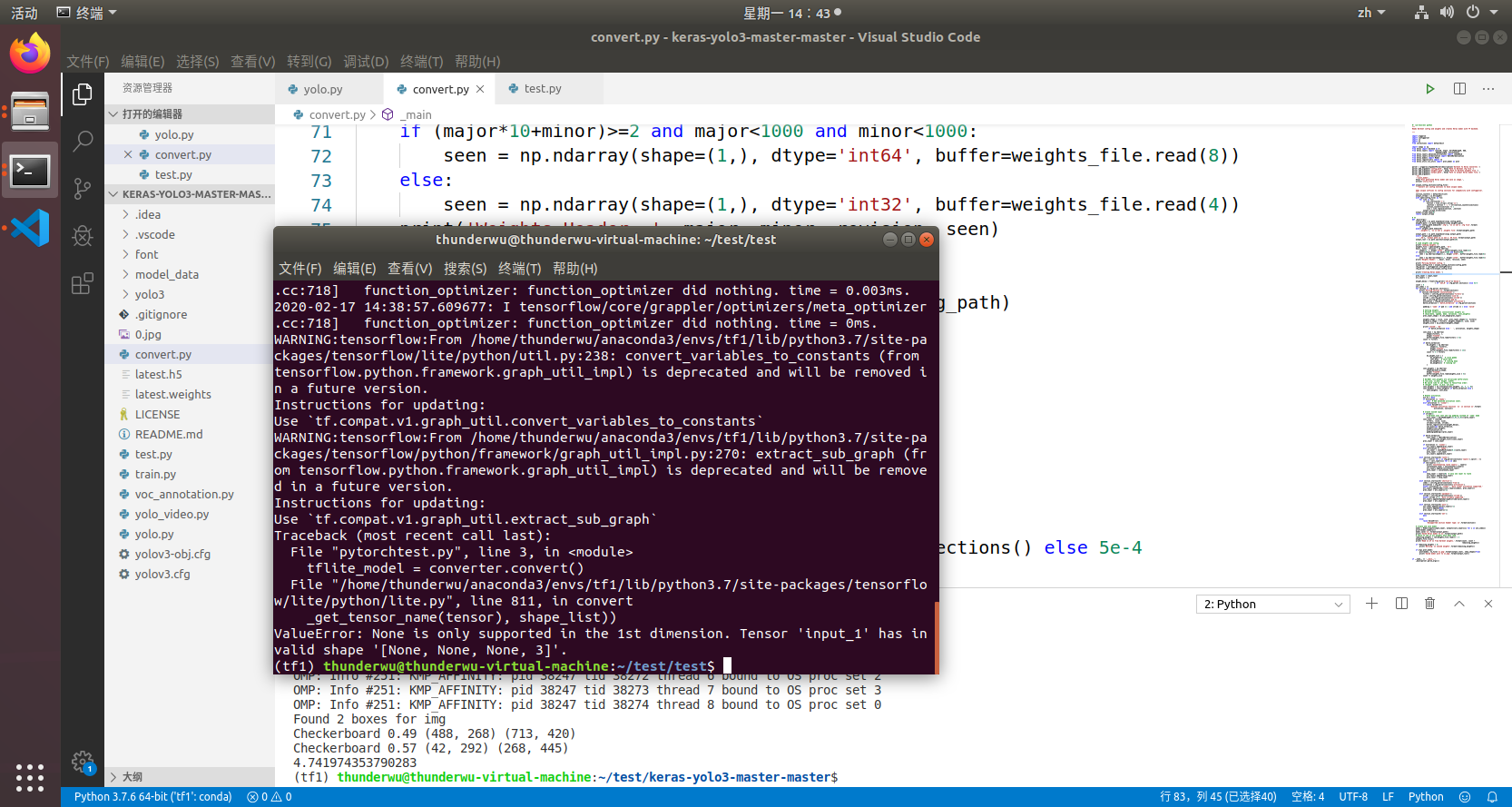

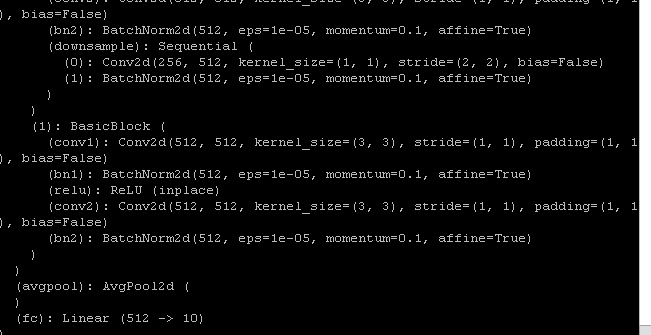

Compared to Fast R-CNN, YOLO makes less than half the number of background errors. YOLO uses features from the entire image to predict bounding boxes across all classes for an image simultaneously. It divides the input image into an $S\times S$ grid. If the center of an object falls into a grid cell, that grid cell is responsible for detecting that object. Each grid cell predicts $B$ bounding boxes and confidence scores for those boxes. These confidence scores reflect how confident the model is that the box contains an object and also how accurate it thinks the predicted box is. Confidence is formally defined as $\Pr(\text{Object}) \cdot \text{IOU}^{\text{truth}}_{\text{pred}}$.

A plain sum-squared error also weights errors in large boxes and small boxes equally, but the error metric should reflect that small deviations in large boxes matter less than in small boxes. To partially address this, the authors predict the square root of the bounding box width and height instead of the width and height directly. Putting it all together, the loss function is formally defined as:

In this section, I will talk about the key implementation points of YOLO V3. The first module to look at is the structure of the neural network, which consists of several blocks. In the model config file we can see the different blocks that build up the network.
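The grid assignment rule can be made concrete with a small helper. `responsible_cell` is a hypothetical name. It assumes pixel coordinates with the origin at the top-left corner and an $S\times S$ grid laid over the whole image.

```python
def responsible_cell(cx, cy, img_w, img_h, S):
    """Return (row, col) of the S x S grid cell that contains the box centre (cx, cy).

    A centre lying exactly on the right or bottom image edge is clamped into the last cell.
    """
    col = min(int(cx / img_w * S), S - 1)
    row = min(int(cy / img_h * S), S - 1)
    return row, col


if __name__ == "__main__":
    # 448 x 448 input with S = 7, as in the original YOLO paper: each cell is 64 px wide.
    print(responsible_cell(200.0, 100.0, 448, 448, 7))  # (1, 3)
```

Only the predictors in that one cell are trained to detect the object, even when the box itself spans several cells.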
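The confidence target $\Pr(\text{Object}) \cdot \text{IOU}^{\text{truth}}_{\text{pred}}$ reduces to the IOU with the ground-truth box when an object is present and to zero otherwise. The sketch below assumes boxes in corner format `(x1, y1, x2, y2)`; `iou` and `confidence_target` are illustrative names.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    inter_h = max(0.0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    inter = inter_w * inter_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def confidence_target(pred_box, truth_box, has_object):
    """Pr(Object) * IOU, with Pr(Object) taken as 1 or 0; truth_box is ignored when there is no object."""
    return iou(pred_box, truth_box) if has_object else 0.0
```

For example, the 2 × 2 boxes `(0, 0, 2, 2)` and `(1, 1, 3, 3)` share a 1 × 1 square. Their IOU is therefore 1 / (4 + 4 − 1) = 1/7.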
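The square-root trick can be checked numerically. A plain squared error charges the same absolute width error equally regardless of box size, but after taking square roots the same error costs more on a small box than on a large one. The widths below (20 px and 200 px, each predicted 10 px too wide) are made-up values for illustration, and `wh_error` is a hypothetical helper.

```python
import math


def wh_error(pred, target, use_sqrt):
    """Squared error on a box width (or height), optionally on square roots as YOLO does."""
    if use_sqrt:
        return (math.sqrt(pred) - math.sqrt(target)) ** 2
    return (pred - target) ** 2


# Hypothetical example: a small box (true width 20 px) and a large box
# (true width 200 px), each predicted 10 px too wide.
small = wh_error(30.0, 20.0, use_sqrt=True)
large = wh_error(210.0, 200.0, use_sqrt=True)

if __name__ == "__main__":
    print("plain squared error:", wh_error(30.0, 20.0, False), wh_error(210.0, 200.0, False))
    print(f"square-root error:   {small:.3f} {large:.3f}")
```

Without square roots both errors are 100. With them, the small box's error (about 1.01) is roughly eight times the large box's (about 0.12), which is the behavior the paragraph asks for.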
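The loss referred to above is, to our understanding of the original YOLO paper, the following sum-squared objective. Here $\mathbb{1}_{ij}^{\text{obj}}$ indicates that the $j$-th box predictor in cell $i$ is responsible for an object, $\mathbb{1}_{ij}^{\text{noobj}}$ that it is not, and $\mathbb{1}_{i}^{\text{obj}}$ that an object appears in cell $i$. The paper sets $\lambda_{\text{coord}} = 5$ and $\lambda_{\text{noobj}} = 0.5$. The second line is where the square roots of $w$ and $h$ enter.

$$
\begin{aligned}
\mathcal{L} ={}& \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left[ (x_i - \hat{x}_i)^2 + (y_i - \hat{y}_i)^2 \right] \\
&+ \lambda_{\text{coord}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left[ \left(\sqrt{w_i} - \sqrt{\hat{w}_i}\right)^2 + \left(\sqrt{h_i} - \sqrt{\hat{h}_i}\right)^2 \right] \\
&+ \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{obj}} \left(C_i - \hat{C}_i\right)^2
+ \lambda_{\text{noobj}} \sum_{i=0}^{S^2} \sum_{j=0}^{B} \mathbb{1}_{ij}^{\text{noobj}} \left(C_i - \hat{C}_i\right)^2 \\
&+ \sum_{i=0}^{S^2} \mathbb{1}_{i}^{\text{obj}} \sum_{c \in \text{classes}} \left(p_i(c) - \hat{p}_i(c)\right)^2
\end{aligned}
$$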
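The model config file uses Darknet's plain-text format. Each block starts with a section name in square brackets, such as `[convolutional]` or `[shortcut]`, followed by `key=value` lines. Below is a minimal parser sketch. `parse_cfg` is a hypothetical name and not necessarily the function the repository uses, and `SAMPLE` is a trimmed, illustrative excerpt in that format.

```python
def parse_cfg(text):
    """Parse Darknet-style .cfg text into a list of blocks.

    Each block is a dict holding its section name under "type" plus its
    key=value options (values kept as strings). Blank lines and lines
    starting with '#' are skipped.
    """
    blocks = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue
        if line.startswith("[") and line.endswith("]"):
            # A new section such as [convolutional] starts a new block.
            blocks.append({"type": line[1:-1].strip()})
        else:
            if not blocks:
                raise ValueError(f"option outside any [section]: {line!r}")
            key, value = line.split("=", 1)
            blocks[-1][key.strip()] = value.strip()
    return blocks


SAMPLE = """
[net]
width=416
height=416

[convolutional]
batch_normalize=1
filters=32
size=3
stride=1
pad=1
activation=leaky

[shortcut]
from=-3
activation=linear
"""

if __name__ == "__main__":
    for block in parse_cfg(SAMPLE):
        print(block["type"], {k: v for k, v in block.items() if k != "type"})
```

Each resulting dictionary can then be mapped to a PyTorch module according to its `type`. The values stay strings and would need converting (for example `int(block["filters"])`) when the layers are built.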

Since detection is framed as a regression problem, YOLO does not need a complex pipeline. It simply runs a single network on a new image at test time, reaching 45 fps without batch processing on a Titan X GPU. Second, YOLO reasons globally about the image when making predictions. Unlike sliding-window and region-proposal-based techniques, YOLO sees the entire image during training and test time, so it implicitly encodes contextual information about classes as well as their appearance.

R-CNN performs detection in three stages, which makes it hard to optimize. It first uses region proposal methods to generate potential bounding boxes in an image, then runs a classifier on these proposed boxes. After classification, post-processing is used to refine the bounding boxes, eliminate duplicate detections, and rescore the boxes based on other objects in the scene. YOLO, on the other hand, uses a single neural network that predicts bounding boxes and class probabilities directly from full images in one evaluation, so it can be optimized end-to-end directly on detection performance.

First, YOLO is very fast.

The You Only Look Once (YOLO) object detection system was developed by Joseph Redmon, Santosh Divvala, Ross Girshick and Ali Farhadi. Unlike many other object detection systems, such as R-CNN, YOLO frames object detection as a regression problem to spatially separated bounding boxes and associated class probabilities.
